
Tuesday, September 28, 2010

Who’s who

Hive, HBase and Pig all build on MapReduce. Hive queries and Pig scripts are compiled into chains of MapReduce jobs, and HBase tables can serve as the input and output of MapReduce jobs. These tools take care of designing and wiring those jobs together and of collecting and storing their results. They are meant to save you time and plenty of headaches: code scattering and highly coupled functions are easy to step into if you have to design complex chains of jobs, share state between them, or build your own database tool by writing nothing but Java jobs that implement the MapReduce contracts.
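To make the "MapReduce contract" concrete, here is a minimal word-count sketch in plain Java with no Hadoop dependency. It is only an in-memory illustration under stated assumptions: the names `MapReduceSketch`, `map`, `reduce` and `run` are hypothetical and are not Hadoop's `Mapper`/`Reducer` API. The driver mimics the map, shuffle-and-sort, and reduce phases that Hive and Pig generate for you.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.BiConsumer;

// Hypothetical, in-memory illustration of the MapReduce contract:
// map(line) -> (word, 1) pairs; shuffle groups pairs by word; reduce(word, [1, 1, ...]) -> total.
// This is NOT the Hadoop API; it only mimics the shape of a word-count job.
final class MapReduceSketch {

    // Map phase: emit (word, 1) for every whitespace-separated token in a line.
    static void map(String line, BiConsumer<String, Integer> emit) {
        for (String token : line.toLowerCase().split("\\s+")) {
            if (!token.isEmpty()) {
                emit.accept(token, 1);
            }
        }
    }

    // Reduce phase: sum all the counts emitted for one word.
    static int reduce(String word, List<Integer> counts) {
        int sum = 0;
        for (int c : counts) {
            sum += c;
        }
        return sum;
    }

    // Driver: run map over every line, group values by key (the "shuffle and sort"
    // step; a TreeMap keeps keys sorted), then call reduce once per key.
    static Map<String, Integer> run(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            map(line, (k, v) -> grouped.computeIfAbsent(k, x -> new ArrayList<>()).add(v));
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            result.put(e.getKey(), reduce(e.getKey(), e.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> input = List.of("Pig and Hive", "Hive and HBase");
        System.out.println(run(input)); // {and=2, hbase=1, hive=2, pig=1}
    }
}
```

The `TreeMap` stands in for the sort Hadoop performs between the two phases; everything else a real job needs (input splits, serialization, task scheduling) is deliberately left out.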

Instead, you can use them for the different purposes they were designed for.

Pig is recommended for chewing through and manipulating semi-structured data, and also for discovering, parsing and similar tasks. It also has some primitive collection data types (tuples, bags and maps), and Zebra integrates with it as a simple table storage facility, so you can avoid writing unnecessary custom data persistence mechanisms.

For Hive and HBase you'll need the data well structured and clean; do not even think about using them with raw data.

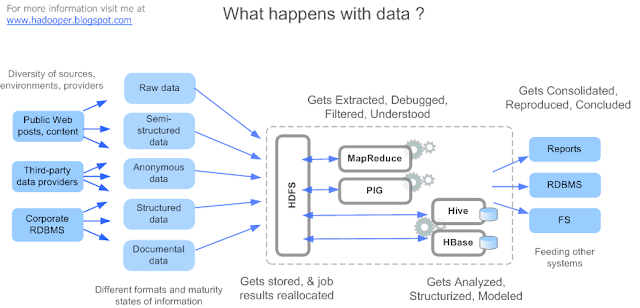

This is one possible scenario, where several data sources, in different formats and at different maturity levels, flow into the Hadoop Distributed File System (HDFS), where the data is chewed through by custom jobs or scripts. It can then be used as structured information for later, higher-level purposes.
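The "chewing" step in that scenario, the kind of work Pig scripts or custom jobs usually do before data reaches Hive or HBase, can be sketched as a parse-and-validate pass. This is a minimal plain-Java sketch assuming a hypothetical tab-separated line layout of user, URL and byte count; `CleanRecords` and its `Hit` record are illustrative names, and the `record` syntax needs Java 16 or later.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

// Hypothetical cleaning step: turn raw, tab-separated lines of the form
// "<user>\t<url>\t<bytes>" into typed records, dropping anything malformed.
// The field layout is an assumption made for this example only.
final class CleanRecords {

    // Structured record that a Hive table row or HBase row could later be built from.
    record Hit(String user, String url, long bytes) {}

    // Parse one raw line; return empty if it does not match the expected shape.
    static Optional<Hit> parse(String line) {
        if (line == null) {
            return Optional.empty();
        }
        String[] fields = line.trim().split("\t", -1);
        if (fields.length != 3 || fields[0].isBlank() || fields[1].isBlank()) {
            return Optional.empty();
        }
        try {
            long bytes = Long.parseLong(fields[2].trim());
            if (bytes < 0) {
                return Optional.empty(); // negative sizes are treated as dirty data
            }
            return Optional.of(new Hit(fields[0].trim(), fields[1].trim(), bytes));
        } catch (NumberFormatException e) {
            return Optional.empty(); // non-numeric byte count: treat as dirty data
        }
    }

    // Keep only the lines that parse cleanly, preserving input order.
    static List<Hit> clean(List<String> raw) {
        List<Hit> out = new ArrayList<>();
        for (String line : raw) {
            parse(line).ifPresent(out::add);
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> raw = List.of(
            "alice\t/index.html\t512",
            "bob\t/about\tnot-a-number", // dropped: bad number
            "garbage line",              // dropped: wrong field count
            "carol\t/faq\t128");
        System.out.println(clean(raw).size()); // 2
    }
}
```

Lines that fail any check are dropped rather than repaired; a real pipeline might instead route them to a separate reject output for inspection.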


So, depending on your requirements, you'll have to strike a balance between faster but overly simple logical tasks in plain MapReduce (where chaining them becomes a given) and slower but more easily evolving designs with clean code using Hive queries or HBase methods. Sometimes you won't even have to decide: writing Java code in an MR approach can become a nightmare, and you may end up writing too many simple routines that hide the real business purpose they exist for.
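As an illustration of the chaining cost, a query such as `SELECT word, COUNT(*) AS c FROM words GROUP BY word ORDER BY c DESC` against a hypothetical `words` table is a single line in HiveQL, but written by hand it typically becomes two jobs: one that counts per word and a second that re-sorts by count. The sketch below simulates that chain in plain Java with no Hadoop classes; `ChainedJobsSketch`, `countJob` and `sortByCountJob` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical two-stage chain, written in plain Java (not the Hadoop API),
// to show the plumbing you own when you chain MapReduce jobs by hand.
final class ChainedJobsSketch {

    // Stage 1 ("job 1"): conceptually map emits (word, 1) and reduce sums per word;
    // Map.merge does both in one step here for brevity.
    static Map<String, Integer> countJob(List<String> words) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String w : words) {
            counts.merge(w, 1, Integer::sum);
        }
        return counts;
    }

    // Stage 2 ("job 2"): its map swaps (word, count) into (count, word) so the
    // shuffle sorts by count (descending here); its reduce emits the pairs in order.
    // Stage 1's output is the intermediate state passed between the two jobs.
    static List<String> sortByCountJob(Map<String, Integer> counts) {
        TreeMap<Integer, List<String>> byCount = new TreeMap<>(Comparator.reverseOrder());
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            byCount.computeIfAbsent(e.getValue(), k -> new ArrayList<>()).add(e.getKey());
        }
        List<String> out = new ArrayList<>();
        for (Map.Entry<Integer, List<String>> e : byCount.entrySet()) {
            for (String w : e.getValue()) {
                out.add(w + "\t" + e.getKey());
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> words = List.of("hive", "pig", "hive", "hbase", "hive", "pig");
        // The "chain": job 2 consumes job 1's output.
        sortByCountJob(countJob(words)).forEach(System.out::println);
    }
}
```

Running `main` prints `hive 3`, `pig 2` and `hbase 1` (tab-separated), one per line. The map returned by `countJob` is exactly the kind of intermediate state you must store and pass along yourself when you chain jobs manually.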

Still, although Pig is one of the most widely used methods for processing data, MapReduce is also commonly used for its simplicity and performance, and because it is the basis of a programming paradigm for synthesizing vast amounts of data into the information we need. Sometimes, too, huge volumes of data have to be processed for very simple tasks, and you won't need to prepare a database design or strategy using Hive, HBase, etc.
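For the "very simple task over huge data" case, a common shape is a map-only job: each input split is filtered on its own and no reduce phase runs. In Hadoop this is usually done by configuring zero reduce tasks (for example with `Job.setNumReduceTasks(0)`); the sketch below only simulates the idea in plain Java, and `MapOnlyFilterSketch` with its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical map-only "job" in plain Java: every input split is filtered
// on its own, with no shared state, and there is no shuffle or reduce step.
final class MapOnlyFilterSketch {

    // The map function for one split: keep only the lines that match.
    static List<String> mapSplit(List<String> split, Predicate<String> keep) {
        List<String> out = new ArrayList<>();
        for (String line : split) {
            if (keep.test(line)) {
                out.add(line);
            }
        }
        return out;
    }

    // Driver: each split's output is simply appended, much like the separate
    // output files written by independent map tasks.
    static List<String> run(List<List<String>> splits, Predicate<String> keep) {
        List<String> all = new ArrayList<>();
        for (List<String> split : splits) {
            all.addAll(mapSplit(split, keep));
        }
        return all;
    }

    public static void main(String[] args) {
        List<List<String>> splits = List.of(
            List.of("INFO start", "ERROR disk full"),
            List.of("INFO ok", "ERROR timeout", "WARN slow"));
        System.out.println(run(splits, line -> line.startsWith("ERROR")));
        // [ERROR disk full, ERROR timeout]
    }
}
```

Because there is no shuffle, no schema or intermediate state is involved, which is part of why plain MapReduce stays attractive for this kind of job.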

A Markitecture

Here I want to show a platform architecture centered on the MapReduce and HDFS projects. I left ZooKeeper out of this picture because it's not integrated with MapReduce or HDFS and is only used by HBase; the same goes for Avro, which is rather a back-end library used by the core.

A summary of how they all interact, which languages they use, and how the platform deploys their processes.


I'll be posting individual diagrams for each of them.
